Driverless technology had a big week in the news last week as Google announced that its self-driving car project has advanced enough that, over the past year, the team has “shifted the focus of the Google self-driving car project onto mastering city street driving”.

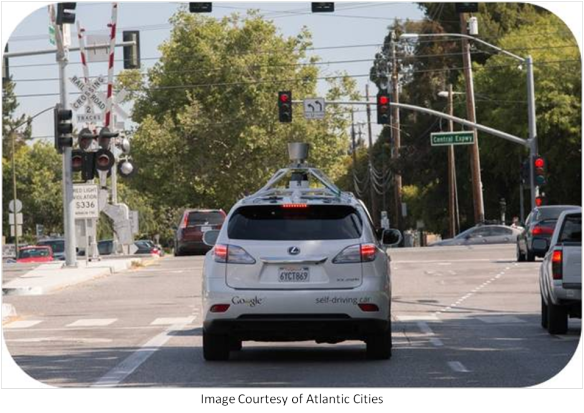

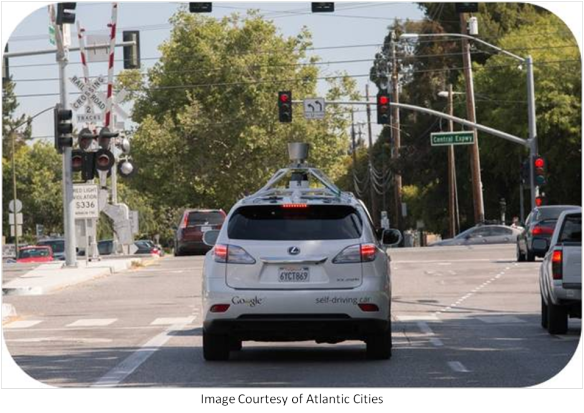

Eric Jaffe of Atlantic Cities took a ride with Google’s Dimitri Dolgov, the car’s software lead, and in his article Jaffe described the ride as “amazingly smooth.” Here’s how Jaffe described part of the trip:

“We go through a yellow light, the car having calculated in a fraction of a second that stopping would have been more dangerous. We push past a nearby car waiting to merge into our lane, because our vehicle’s computer knows we have the right-of-way. We change into the right lane for a seemingly pointless reason until, a minute later, the car signals a right turn. We go the exact speed limit because maps the car consults tell it this road’s exact speed limit. The car identifies orange cones in the shoulder and we drift laterally in our lane, to give any road workers more space.

Between you and me: amazingly smooth.”

For Google’s engineers, a safe and boring trip is now expected. Google’s twenty-four self-driving cars have driven over 700,000 miles since 2009 with only two minor accidents, both due to driver error. Now that the car has been put through its paces on the highway, Google has decided to start testing on unpredictable city and suburban streets. Twice during Jaffe’s ride-along, Dolgov had to take manual control of the car, a relatively high number for a test drive and a source of frustration for the engineers. The fact that the car was completely on its own the rest of the time, however, illustrates just how far this project has come. Two years ago, the Google car couldn’t handle half the situations it can now, and the situations it still can’t handle are detected fast enough for the car to stop safely or for the driver to take over, a clear illustration of Google’s “safety first” attitude. But what makes Google’s driverless car driverless? What systems are responsible for this outstanding achievement?

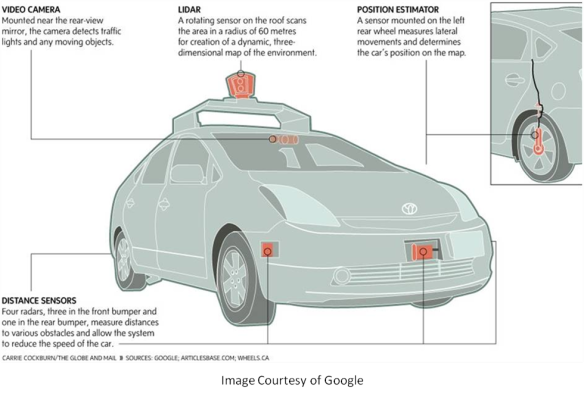

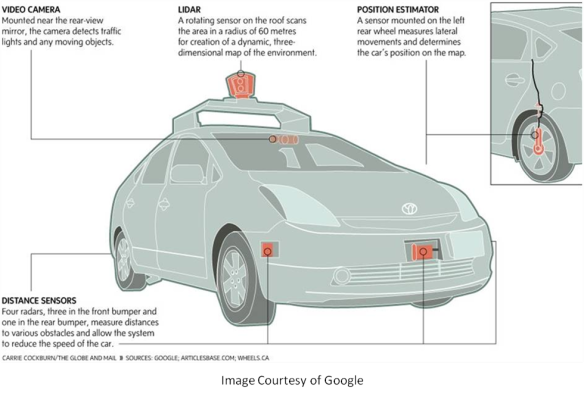

The first part of the Google Car technology is the LIDAR system (Laser Imaging Detection and Ranging). This system is essentially a laser radar composed of a rangefinder and a Velodyne 64-beam laser. It has the appearance of a large gray bucket mounted atop the Google Car. This “bucket” contains those 64 lasers and spins them around 10 times a second. The rangefinder determines how far the car is from an object by measuring how long it takes for the LIDAR’s laser pulses to hit the object, bounce off and return to the system. The LIDAR is used in conjunction with pre-loaded maps of the test area, constructed in painstaking detail by Google’s engineers using Google Maps and Street View. These maps cannot account for moving objects the way LIDAR can, but they give the positions of all static objects along the current test drive, which gives the car a good idea of what to expect. Taken together, the LIDAR and maps let the car see like this:

In the picture above the car encounters a set of traffic cones during Jaffe’s ride-along. The car wasn’t able to navigate past the roadwork, but it saw the obstacle and came to a safe stop before hitting it. All of this footage is recorded on a laptop kept by the Google engineer riding with the backup driver; the laptop has a comment box for noting anything interesting or flagging any major issues.
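The rangefinder's time-of-flight measurement described earlier comes down to simple arithmetic: the laser pulse travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below is only an illustration of that principle, not Google's or Velodyne's code; the function names are hypothetical.

```python
# Illustrative time-of-flight arithmetic. Function names are hypothetical
# and are not taken from any real LIDAR software.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in a vacuum


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Return the distance in meters to the object that reflected the pulse."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), so halve the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Return how long a pulse takes to reach an object and come back."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A pulse that returns after one microsecond.
    print(f"{range_from_round_trip(1e-6):.1f} m")
    # Round-trip time for an object 30 meters away.
    print(f"{round_trip_for_range(30.0) * 1e9:.0f} ns")
```

A pulse that comes back after one microsecond has hit something roughly 150 meters away, which gives a sense of how precise the timing has to be for objects only a few meters from the car.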

Other important components of the Google Car include GPS, cameras and radar. GPS (Global Positioning System) devices, such as portable units and those in smartphones, receive signals from dedicated satellites in orbit to determine their location. GPS can deliver a driver within several meters of their intended destination, close enough for their eyes and ears to take over. The Google Car has LIDAR, radar and cameras for its eyes and ears, but GPS gives it the big picture, which is essential for long-term navigation.

LIDAR can give the shape of all objects around the car, but short-range digital cameras allow the car to read things like road signs. Radar has a job similar to that of the cameras and LIDAR but at much longer range, up to 160 meters in any direction. Radio waves are emitted from the radar, hit a solid object and bounce back to give an idea of how far away the object is, how big it is and where it’s likely to move. This prepares the car for non-static obstacles its cameras and LIDAR can’t see yet. The Google Car’s software is sophisticated enough to use all of these tools to create a safe, smooth ride, and Jaffe describes later in his article how the car performed.

“The car then passed a few more staged tests. We slowed for a group of jaywalkers and a rogue car turning in front of us from out of nowhere. We stopped at a construction worker holding a temporary STOP sign and proceeded when he flipped it to SLOW — proof the car can read and respond to dynamic surroundings, making it less reliant on pre-programmed maps. We merged away from a lane blocked by cones not unlike the one that stumped us earlier.”
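To make the radar's role a little more concrete, here is one simple way successive range readings could hint at where an object is heading: compare two distances taken a short time apart and extrapolate. This is a simplified sketch under stated assumptions, not how Google's software works; real automotive radars typically measure speed more directly, and the function names and the constant-speed assumption here are purely illustrative.

```python
# Simplified sketch: estimate closing speed from two range readings and
# extrapolate. Names and the constant-speed assumption are hypothetical.


def radial_speed(range_then_m: float, range_now_m: float, dt_s: float) -> float:
    """Return speed along the line of sight in m/s (negative means approaching)."""
    if dt_s <= 0:
        raise ValueError("time between readings must be positive")
    return (range_now_m - range_then_m) / dt_s


def predicted_range(range_now_m: float, speed_m_per_s: float, horizon_s: float) -> float:
    """Extrapolate the range after horizon_s seconds, assuming constant speed."""
    # A range cannot be negative, so clamp the estimate at zero.
    return max(0.0, range_now_m + speed_m_per_s * horizon_s)


if __name__ == "__main__":
    # An object measured at 100 m, then at 98 m a tenth of a second later.
    speed = radial_speed(100.0, 98.0, 0.1)
    print(f"closing speed: {abs(speed):.1f} m/s")
    print(f"estimated range in 2 s: {predicted_range(98.0, speed, 2.0):.1f} m")
```

In this example the object closes 2 meters in a tenth of a second, so the car would have only a few seconds to react, which is why seeing obstacles at long range matters.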

We hope this article gives you a good overview of what this amazing technology can do and how far along it is. In upcoming posts, we plan to go into much greater detail about the individual systems, their development history and how they work.